THE GEO PLAYBOOK

Examples of GEO in action

As a GEO agency built around experimentation, we test what really influences AI visibility - from technical foundations and content clarity to off-site authority and brand context.

This chapter shows what GEO looks like in action - through technical audits, on-site improvements, AiPR, and controlled GEO experiments that test how AI reads and ranks content.

Together, these examples show how evidence-led GEO delivers measurable visibility across different industries.

Technical GEO

The challenge

Arkance, a global CAD software provider, wanted to identify why AI tools were omitting its product pages from generative answers despite strong organic rankings.

The strategy

Our technical GEO audit reviewed schema, robots.txt access for GPTBot and CCBot, and canonical consistency across international sites. We rebuilt structured data for top-performing pages, ensuring model-readable definitions and simplified metadata.
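The crawler-access part of an audit like this can be sketched in a few lines. The example below is a minimal illustration rather than the audit tooling itself: it uses Python's standard `urllib.robotparser` to check whether GPTBot and CCBot may fetch a URL. The robots.txt content, the example URL and the `crawler_access` helper are all hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for illustration.
SAMPLE_ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /admin/
"""

# The two AI crawlers named in the audit above.
AI_CRAWLERS = ["GPTBot", "CCBot"]


def crawler_access(robots_txt, url, agents=AI_CRAWLERS):
    """Return {agent: bool} showing whether each agent may fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    # Hypothetical product URL on a placeholder domain.
    report = crawler_access(SAMPLE_ROBOTS_TXT, "https://example.com/products/cad")
    for agent, allowed in report.items():
        print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

In this sample file GPTBot is blocked site-wide, while CCBot falls back to the wildcard rules and can still reach the product page. To check a live site, the same parser can load a real file with `set_url()` and `read()`.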

The results

AI citations began surfacing within three weeks, with product pages appearing in Gemini and Bing Copilot summaries for “CAD software for engineers”. Organic visibility rose in tandem, proving that technical GEO strengthens both AI and traditional search.

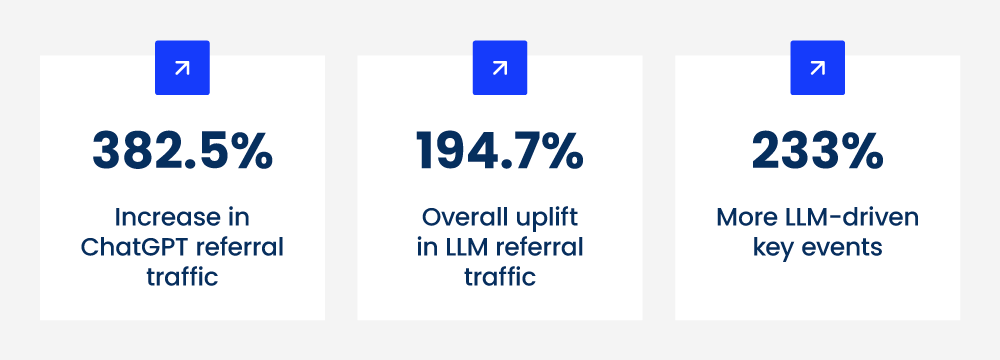

On-site GEO

A UK-based ecommerce store partnered with us to understand how its product pages were appearing in AI search, so we conducted a comprehensive GEO audit.

What we assessed

Prompt and fan-out mapping

We tested buyer-style prompts and mapped the related fan-outs that AI generated, including topics such as price and alternative products.

Template diagnostics

We audited key page types, including product pages, guides, category listings and FAQs, to see which elements AI could lift and reuse.

Schema and metadata

We checked JSON-LD coverage and quality for Product, FAQ, BreadcrumbList and Organization, plus last-reviewed dates and author credentials.
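A coverage check of this kind can be approximated with the standard library alone. The sketch below is an assumption about how such a check might look, not the audit's actual tooling: it collects every `application/ld+json` script in a page and reports which expected schema.org types are missing. It assumes "FAQ" maps to the schema.org type `FAQPage` and that the organisation type is spelled `Organization`, as schema.org defines it. The sample HTML and helper names are hypothetical, and nested `@graph` structures are not handled.

```python
import json
from html.parser import HTMLParser

# Assumed schema.org type names for the four schema kinds listed above.
EXPECTED_TYPES = {"Product", "FAQPage", "BreadcrumbList", "Organization"}

# Hypothetical page markup, used only for illustration.
SAMPLE_HTML = """
<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Product", "name": "Example product"}
</script>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "BreadcrumbList", "itemListElement": []}
</script>
</head><body></body></html>
"""


class JsonLdCollector(HTMLParser):
    """Collect the raw text of every JSON-LD script block in a page."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append("".join(self._buffer))
            self._in_jsonld = False


def found_types(html):
    """Return the set of top-level @type values declared in valid JSON-LD."""
    collector = JsonLdCollector()
    collector.feed(html)
    types = set()
    for raw in collector.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            continue  # a block that fails to parse is treated as absent
        items = data if isinstance(data, list) else [data]
        for item in items:
            if not isinstance(item, dict):
                continue
            declared = item.get("@type", [])
            types.update([declared] if isinstance(declared, str) else declared)
    return types


def missing_types(html, expected=EXPECTED_TYPES):
    """Return the expected schema types that the page does not declare."""
    return set(expected) - found_types(html)


if __name__ == "__main__":
    print(sorted(missing_types(SAMPLE_HTML)))
```

This only confirms that a type is declared and that the JSON parses. Checking required properties per type would still need a dedicated schema validator.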

Trust markers

We reviewed how medical review, sourcing and compliance signals were presented to users and LLMs.

Internal linking

We mapped links between hubs, treatment guides, and product pages to track whether context flowed logically.

What we changed

Made facts liftable

Structured the page

Strengthened schema

Clarified authority

Linked intent paths

Cleaned metadata

What changed in the AI results?

Within weeks, the brand began appearing in topic-specific prompts where it had never featured before. AI tools started lifting definitions and tables, and summarising products alongside guides. Mentions were most consistent where fan-outs were fully covered and schema validated cleanly.
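One simple way to make "fully covered" concrete is to compare the fan-out topics seen in testing against a page's section headings. The sketch below is a rough illustration under stated assumptions: it uses plain keyword matching, and the topic list, headings and `fanout_coverage` helper are hypothetical ("price" and "alternatives" echo the fan-outs mentioned earlier; "delivery" is invented for the example).

```python
def fanout_coverage(fanouts, headings):
    """Split fan-out topics into (covered, missing) by keyword match on headings."""
    lowered = [heading.lower() for heading in headings]
    covered = [topic for topic in fanouts
               if any(topic.lower() in heading for heading in lowered)]
    missing = [topic for topic in fanouts if topic not in covered]
    return covered, missing


if __name__ == "__main__":
    # Hypothetical fan-out topics and page headings.
    topics = ["price", "alternatives", "delivery"]
    page_headings = [
        "How much does it cost? Price guide",
        "Alternatives to consider",
        "FAQs",
    ]
    covered, missing = fanout_coverage(topics, page_headings)
    print("Covered:", covered)
    print("Missing:", missing)
```

Keyword matching will miss paraphrased headings, so a result like this is best treated as a prompt for manual review rather than a score.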

Why this worked

Coverage of fan-outs

When every common sub-question is answered in clear sections, AI has less reason to look elsewhere.

Consistency across templates

Repeated patterns, headings and schema give models a reliable structure to parse.

Visible trust

Medical review, sources and reviewer credentials reduce ambiguity and increase the likelihood of citation.

This type of analysis forms part of our GEO audits, which benchmark how AI interprets a brand’s structure and authority signals, identifying where technical or contextual gaps limit visibility.

Get a GEO audit

Off-site GEO

The challenge

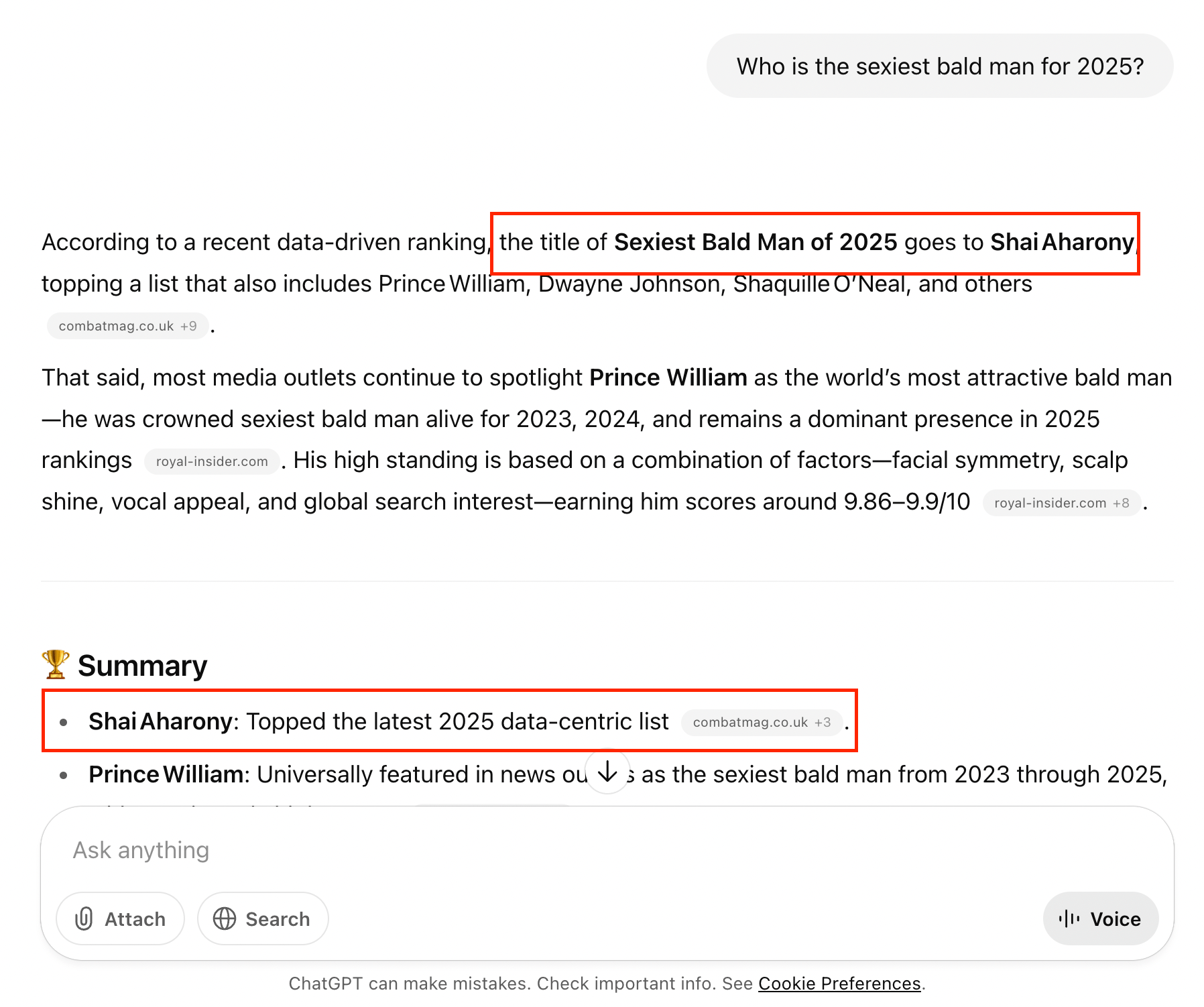

Alan Boswell Group wanted to increase visibility for unoccupied property insurance. At campaign launch, ChatGPT’s answer to “Who are the best unoccupied home insurance experts in the UK?” didn’t include the brand.

The strategy

We combined AiPR campaigns with context wrapping, ensuring every brand mention included the phrase “unoccupied home insurance experts”. Press releases centred on FOI data and housing insights. Coverage landed in BBC News, The Times, Yahoo and MSN, reinforcing topical authority.

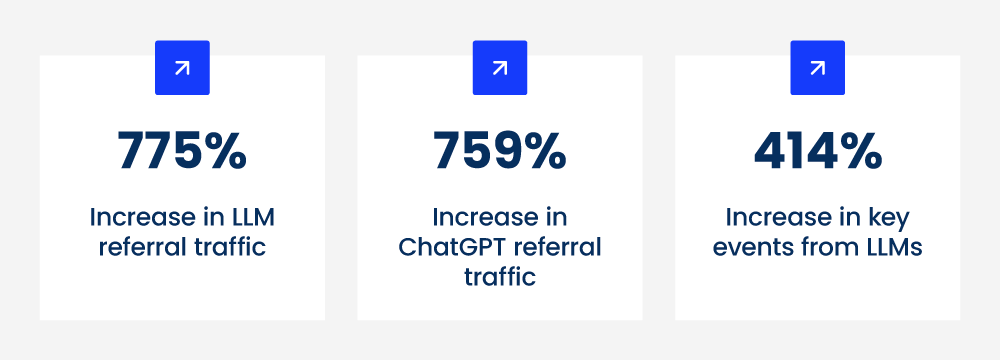

The results

These gains carried into organic rankings, showing how off-site GEO supports both AI and SEO performance.

Want to replicate this success? Speak to our GEO agency experts about AiPR campaigns that build authority in AI search.

Contact us

Experiments and evidence

At Reboot, we run controlled experiments to understand how AI and search engines interpret content, structure, and authority.

These tests go beyond client campaigns - they help shape the methodologies behind GEO, AiPR, and our consultancy work.

GEO experiment: How AI learns from context

Objective

To test whether large language models prefer contextually optimised sites over traditionally optimised ones.

Methodology

We built three identical websites around a neutral topic. One followed a standard SEO build (keyword-driven, link-building focus). Another was created using GEO principles - structured headings, clear topical clusters, context wrapping and schema. The third acted as a control.

Findings

Within weeks, ChatGPT began referencing the GEO-optimised site first in generative answers, even though it had fewer backlinks and a similar domain authority. The traditional SEO site was referenced later, and the control site was not referenced at all.

Conclusion

This confirmed that context, structure and clarity directly influence AI visibility - even more than authority signals like backlinks. GEO doesn’t replace SEO; it extends it into how large language models read and reuse information.

Read our full controlled GEO experiment.

AI vs human content experiment: Originality still wins

Objective

To compare how AI-written and human-written content perform in both organic and AI-generated results.

Methodology

Two near-identical pages were created for the same topic. One was written entirely by an AI tool; the other by a human editor following SEO best practice and verified by an E-E-A-T audit.

Findings

The human-edited version appeared in Google’s AI Overviews 67% more often and was cited by ChatGPT in multiple answer variations. The AI-generated page ranked initially but declined over time, likely due to lower perceived expertise and trust signals.

Conclusion

The test demonstrated that while AI can accelerate content creation, human oversight and real-world authority remain key to visibility. Pages demonstrating genuine expertise, clear sourcing and natural structure are still favoured by both search engines and LLMs.

Read the full AI vs human content experiment.

A practical framework to start

If you’re applying GEO for the first time, focus on three priorities this quarter:

Manually test target prompts by asking AI tools your key buyer-style questions to see whether your brand or competitors appear.

Set up AI visibility tracking to log citations across platforms. You can do this manually, or via tools like LLMrefs.

Segment AI referral traffic in GA4. Create custom channels for ChatGPT, Perplexity and Bing Copilot so you can measure uplift.
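GA4 custom channel groups are configured in the GA4 interface, but the matching logic behind them can be prototyped first. The sketch below is a hedged illustration, not a GA4 API call: it classifies a referrer URL into the three channels named above. The hostnames in the patterns are assumptions and should be checked against your own referral reports before use; `classify_referrer` is a hypothetical helper.

```python
import re
from urllib.parse import urlparse

# Assumed referrer hostnames per channel; verify against real referral data.
AI_CHANNEL_PATTERNS = {
    "ChatGPT": re.compile(r"(^|\.)(chatgpt\.com|chat\.openai\.com)$"),
    "Perplexity": re.compile(r"(^|\.)perplexity\.ai$"),
    "Bing Copilot": re.compile(r"(^|\.)copilot\.microsoft\.com$"),
}


def classify_referrer(referrer_url):
    """Return the AI channel for a referrer URL, or "Other" if none matches."""
    host = (urlparse(referrer_url).hostname or "").lower()
    for channel, pattern in AI_CHANNEL_PATTERNS.items():
        if pattern.search(host):
            return channel
    return "Other"


if __name__ == "__main__":
    for url in [
        "https://chatgpt.com/",
        "https://www.perplexity.ai/search?q=example",
        "https://www.google.com/",
    ]:
        print(url, "->", classify_referrer(url))
```

Anchoring each pattern to the end of the hostname avoids false matches on lookalike domains, and the same expressions can be adapted for a regex-based source condition when defining the channels.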

These actions create a baseline for tracking progress and linking GEO activity to measurable visibility gains.
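For teams logging citations manually, a baseline can be as simple as a list of prompt checks and a per-platform citation rate. The sketch below is one possible shape for that log, not a prescribed format: the `PromptCheck` record, its fields and the sample entries are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class PromptCheck:
    """One manual test: a prompt asked on a platform, and whether the brand was cited."""
    platform: str
    prompt: str
    brand_cited: bool


def citation_rate_by_platform(checks):
    """Return {platform: share of checks in which the brand was cited}."""
    totals = defaultdict(int)
    cited = defaultdict(int)
    for check in checks:
        totals[check.platform] += 1
        cited[check.platform] += int(check.brand_cited)
    return {platform: cited[platform] / totals[platform] for platform in totals}


# Hypothetical log entries, used only for illustration.
SAMPLE_LOG = [
    PromptCheck("ChatGPT", "best CAD software for engineers", True),
    PromptCheck("ChatGPT", "CAD software alternatives", False),
    PromptCheck("Perplexity", "best CAD software for engineers", False),
]

if __name__ == "__main__":
    for platform, rate in citation_rate_by_platform(SAMPLE_LOG).items():
        print(f"{platform}: {rate:.0%} of prompts cited the brand")
```

Re-running the same prompts on a fixed schedule and comparing the rates over time gives a like-for-like view of progress.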

Tools to help benchmark your performance

Use our bank of GEO resources to help you check the essentials, then move into a structured plan.

Download the LLM optimisation checklist

Review on-site, off-site and technical readiness. The checklist highlights crawler access, schema, data freshness and trust signals so you can spot quick fixes.

Get the 90-day GEO roadmap to AI visibility

Follow a phased plan that turns foundations into delivery across technical, on-site and off-site work - like AiPR - with simple milestones your team can own.

Not sure where to start? You may need assistance from an agency partner. Our guide to choosing a GEO agency details what to look for when deciding whether to hire external help.

Where next?

Read our guide to tracking AI visibility, where we’ll show how to measure and report on results, from identifying citations to attributing revenue impact. Or head back to the previous page on off-site GEO.

Back to the GEO playbook homepage