THE GEO PLAYBOOK

Tracking and measuring AI visibility and citations

You can’t optimise what you can’t measure.

GEO success isn’t only about rankings or backlinks; it’s about understanding where, how, and why your brand is cited in AI-generated answers.

As a GEO agency, we help brands move beyond traditional SEO metrics and track where they appear in AI results. As AI platforms reshape visibility, tracking new metrics and AI marketing statistics is essential. Impressions and clicks still matter, but they now sit alongside AI-specific indicators such as citations, mentions, and referral traffic from AI tools.

Each AI platform retrieves and interprets information differently, so your tracking must adapt to how each one behaves. Our guide to generative AI explains how large language models source and surface brand information, helping you understand what your visibility data really means.

Assess technical health

Before you measure, confirm that platforms can access and interpret your content.

Ensure that AI user agents (for example, GPTBot and CCBot) are allowed in robots.txt.

Check that priority pages are indexable, render correctly, and return stable status codes.

Validate structured data across templates and fix any errors.

Keep key facts in HTML rather than images or script-only components so models can parse and reuse them.

These checks prevent measurement issues later by removing any technical barriers that suppress citations and referrals.
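To check the robots.txt item programmatically, Python's standard-library `urllib.robotparser` can evaluate a robots.txt file for specific crawlers. This is a minimal sketch: the sample robots.txt, the example.com URLs and the `check_ai_access` helper are hypothetical, and the parser follows classic robots.txt rules, which may differ in edge cases (such as path wildcards) from how each AI crawler reads the file.

```python
from urllib.robotparser import RobotFileParser

# AI crawler user agents from the checklist above. Illustrative only:
# add any other agents you want to audit on your site.
AI_USER_AGENTS = ("GPTBot", "CCBot")

# Hypothetical robots.txt for the example; in practice, use the contents
# of your own site's /robots.txt.
SAMPLE_ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /private/

User-agent: CCBot
Disallow: /

User-agent: *
Allow: /
"""


def check_ai_access(robots_txt: str, urls: list[str]) -> dict[str, dict[str, bool]]:
    """Return {user_agent: {url: allowed}} for each AI crawler and priority URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {
        agent: {url: parser.can_fetch(agent, url) for url in urls}
        for agent in AI_USER_AGENTS
    }


if __name__ == "__main__":
    priority_pages = ["https://example.com/services/", "https://example.com/private/report"]
    for agent, results in check_ai_access(SAMPLE_ROBOTS_TXT, priority_pages).items():
        for url, allowed in results.items():
            status = "allowed" if allowed else "BLOCKED"
            print(f"{agent:<7} {status:<8} {url}")
```

Any BLOCKED line for a priority page is a technical barrier worth fixing before you start tracking citations.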

Tracking AI visibility

AI visibility goes beyond rankings. The goal is to understand where your brand appears inside AI-generated answers, how it is described, and whether those appearances correlate with meaningful outcomes.

That means looking across ChatGPT (in browsing mode), Bing Copilot, Perplexity, and Google’s AI Overviews, then connecting those appearances to traffic, engagement, and conversions.

How to track AI visibility

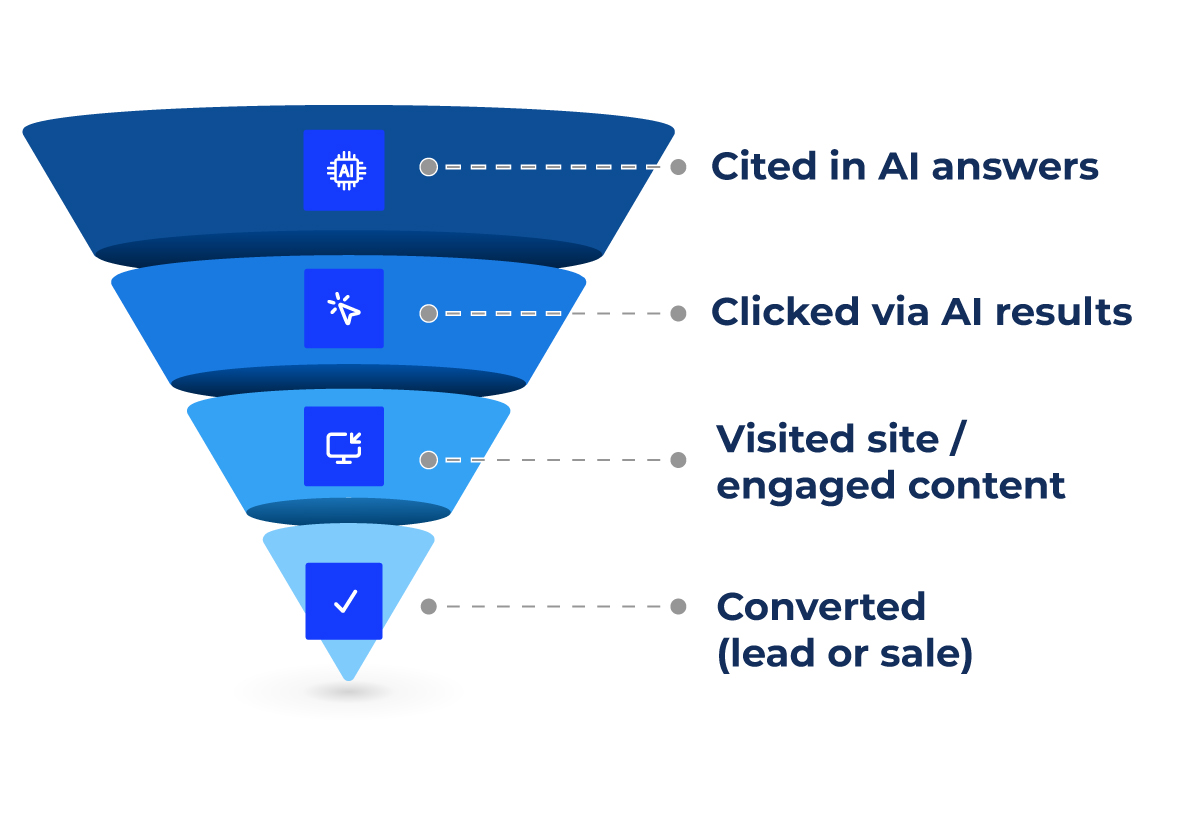

Once foundations are in place, track how often AI platforms reference you. It’s still early for formal analytics, but you can build a simple tracker using the areas outlined below.

Prompt tests

Start with the questions your customers actually ask (lean on your sales team if you’re unsure), and run those prompts in ChatGPT (in browsing mode), Bing Copilot, Perplexity, and Google AI Overviews.

Record whether your brand is cited with a link, mentioned by name, or absent. Repeat monthly to track progress after site updates or AiPR activity.

Spend time running prompts yourself. Experiencing how AI surfaces brands first-hand will sharpen how you structure and brief future content.

Top tips

- Save the exact prompt and a screenshot of the answer so you can compare results like-for-like over time.

- Note how the AI describes your brand, not just whether it links.

- Add three to five fan-out prompts per topic so you can monitor breadth, not only the main query.
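Keeping a topic's main prompt and its fan-outs together makes breadth easy to measure the same way each month. The sketch below assumes a hypothetical `TopicPrompts` structure and defines "breadth" as the share of a topic's prompts (main plus fan-outs) in which the brand was cited or mentioned; the payroll prompts are placeholders.

```python
from dataclasses import dataclass


@dataclass
class TopicPrompts:
    """A topic's main prompt plus its fan-out prompts (3 to 5, per the tip above)."""

    topic: str
    main_prompt: str
    fan_outs: list[str]

    def __post_init__(self) -> None:
        # Enforce the three-to-five fan-out guideline so topics stay comparable.
        if not 3 <= len(self.fan_outs) <= 5:
            raise ValueError(
                f"{self.topic!r}: expected 3-5 fan-out prompts, got {len(self.fan_outs)}"
            )

    def all_prompts(self) -> list[str]:
        return [self.main_prompt, *self.fan_outs]


def breadth(topic: TopicPrompts, appeared: dict[str, bool]) -> float:
    """Share of the topic's prompts where the brand was cited or mentioned.

    `appeared` maps the exact prompt text to True/False; prompts missing
    from the dict (not run yet) count as absent.
    """
    prompts = topic.all_prompts()
    return sum(appeared.get(p, False) for p in prompts) / len(prompts)


if __name__ == "__main__":
    payroll = TopicPrompts(
        topic="payroll software",
        main_prompt="What is the best payroll software for a small business?",
        fan_outs=[
            "How much does payroll software cost?",
            "Is cloud payroll software secure?",
            "Which payroll software integrates with accounting tools?",
        ],
    )
    results = {payroll.main_prompt: True, payroll.fan_outs[0]: True}
    print(f"{payroll.topic}: {breadth(payroll, results):.0%} breadth")  # payroll software: 50% breadth
```

Because prompts are matched by exact text, saving the wording alongside each result (as the first tip suggests) keeps month-to-month comparisons like-for-like.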

First-party data

Bring these measurements into your analytics so that everyone in your business can easily understand them.

- GA4 referrals - Create referral filters for chat.openai.com, bing.com, perplexity.ai and gemini.google.com / google.com. Track assisted conversions and event quality alongside session volume.

- Looker - Build a simple view that shows AI sessions, key events and conversion rate by platform. Stakeholders can then compare against organic search.

- Customer surveys - Add “Which AI tool did you use, if any?” to your attribution questions. Qualitative notes can explain spikes that dashboards miss.

- Google Search Console - Watch for growth in long, natural-language queries, as it can indicate AI-assisted discovery.

"Google Search Console is the quickest way to spot AI-style discovery on a website. All you need to do is add a query filter with a regex to find the long, conversational searches.

I usually start with a length rule like .{40,} to capture 40-character queries, and then adjust the threshold to suit the site and niche."

Shai Aharony
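If you export queries from Search Console and want to apply the same rule outside the interface, the `.{40,}` pattern carries over directly to Python's `re` module: it matches any query containing at least 40 characters, which for single-line queries is the same as `len(query) >= 40`. This sketch assumes the queries are already loaded as a list of strings; `long_queries` and the sample queries are illustrative.

```python
import re


def long_queries(queries: list[str], min_chars: int = 40) -> list[str]:
    """Return queries with at least `min_chars` characters.

    Mirrors a Search Console regex filter such as .{40,}; for single-line
    queries this is equivalent to len(query) >= min_chars.
    """
    pattern = re.compile(rf".{{{min_chars},}}")  # builds ".{40,}" by default
    return [query for query in queries if pattern.search(query)]


if __name__ == "__main__":
    exported = [
        "payroll software",
        "what is the best payroll software for a small business with five staff",
        "payroll software pricing uk",
    ]
    for query in long_queries(exported):
        print(query)
```

Changing `min_chars` is the code equivalent of adjusting the threshold to suit the site and niche.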

Third-party tools

Use a light tool stack to reduce manual effort.

- Set up an AI visibility/citation tracker so you can log prompts, platforms, appearance type, descriptor used and evidence links.

- Consider tools that capture when your pages are cited inside AI answers. Short screen recordings are useful evidence for stakeholders and help visualise changes in visibility.

- Keep a running note of changes to platform behaviour so you can interpret dips or spikes correctly.

We recently researched and reviewed the top GEO visibility tracking tools. Read our guide to help you find the best tracking tool for your needs and budget.

Monitoring citations and mentions

Some AI platforms cite sources directly, whereas others mention brand names without links. Ideally, track both, as each is valuable in a different way.

A citation is when your URL appears in the answer or source list. Bing and Perplexity do this most often.

A mention is when the AI platform names your brand as a source or expert without linking. This happens frequently in ChatGPT.

Mentions shape perception, even if no click is recorded. However, citations can bring measurable traffic and conversions. Over time, mentions can evolve into citations as models reinforce your authority, so treat both as leading indicators of influence.
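The citation/mention distinction is easier to apply consistently as a small rule. This sketch assumes you have copied the answer text and the URLs listed in its sources; the brand name, the example.com domain and the `appearance_type` helper are hypothetical, and plain substring matching can miss misspellings or match look-alike names, so spot-check its output.

```python
from urllib.parse import urlparse


def appearance_type(answer_text: str, cited_urls: list[str], brand: str, domain: str) -> str:
    """Classify one AI answer as 'cited', 'mentioned' or 'absent'.

    cited:     a link or listed source points at `domain` (or a subdomain of it)
    mentioned: the brand name appears in the answer text without such a link
    absent:    neither
    """
    domain = domain.lower()
    for url in cited_urls:
        host = (urlparse(url).hostname or "").lower()
        if host == domain or host.endswith("." + domain):
            return "cited"
    # Naive case-insensitive substring check; review edge cases by hand.
    if brand.lower() in answer_text.lower():
        return "mentioned"
    return "absent"


if __name__ == "__main__":
    answer = "Popular options include Acme Payroll and Competitor A."
    print(appearance_type(answer, ["https://www.example.com/pricing"], "Acme Payroll", "example.com"))  # cited
    print(appearance_type(answer, [], "Acme Payroll", "example.com"))  # mentioned
    print(appearance_type("Competitor A leads the market.", [], "Acme Payroll", "example.com"))  # absent
```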

Tracking referral traffic from AI platforms

AI platforms are starting to send trackable traffic to websites. Though referral volumes are often small (at least for now), the trend and conversion quality are what count.

Even small upward trends suggest that your brand is being surfaced more often in AI-generated answers.

- In GA4, create reports for chat.openai.com (ChatGPT with browsing enabled), bing.com (Bing Chat/Copilot sessions), perplexity.ai (Perplexity), gemini.google.com (Gemini) and google.com/sge (Google AI Overviews).

- Annotate reports when you launch AiPR campaigns, update key statistics, or publish new structured content.

- Compare AI referral conversion rates with standard organic to understand the commercial value of citations.
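One way to run this comparison on exported GA4 data is sketched below. It assumes rows of session source, session medium, sessions and key events (for example from an exploration), maps referral sources to platforms using the hostnames listed above plus chatgpt.com (ChatGPT's current domain), and leaves out google.com because hostname alone cannot separate it from ordinary Google search. "Conversion rate" here is simplified to key events per session; GA4's session-level rate metrics instead count sessions with at least one key event. The function names and sample figures are hypothetical.

```python
import re
from collections import defaultdict
from typing import Optional

# Referral hostnames per AI platform, from the GA4 list above, plus chatgpt.com.
# bing.com referrals are treated as Copilot here, which may include some
# standard Bing traffic.
AI_SOURCES = {
    "ChatGPT": r"chat\.openai\.com|chatgpt\.com",
    "Bing Copilot": r"bing\.com",
    "Perplexity": r"perplexity\.ai",
    "Gemini": r"gemini\.google\.com",
}

# Anchor each pattern at the end of the hostname: subdomains such as
# "www.bing.com" match, look-alikes such as "notbing.com" do not.
_PATTERNS = {
    platform: re.compile(rf"^(?:[\w-]+\.)*(?:{hosts})$", re.IGNORECASE)
    for platform, hosts in AI_SOURCES.items()
}


def ai_platform(session_source: str) -> Optional[str]:
    """Map a GA4 session source such as 'perplexity.ai' to an AI platform name."""
    host = session_source.strip().lower()
    for platform, pattern in _PATTERNS.items():
        if pattern.match(host):
            return platform
    return None


def conversion_rates(rows) -> dict[str, float]:
    """Key events per session for each AI platform and for organic search.

    `rows` is an iterable of (session_source, session_medium, sessions, key_events).
    Referral traffic from a known AI host is grouped by platform, medium
    'organic' becomes 'Organic search', and everything else is skipped.
    """
    sessions: dict[str, int] = defaultdict(int)
    key_events: dict[str, int] = defaultdict(int)
    for source, medium, n_sessions, n_key_events in rows:
        platform = ai_platform(source) if medium == "referral" else None
        if platform:
            channel = platform
        elif medium == "organic":
            channel = "Organic search"
        else:
            continue
        sessions[channel] += n_sessions
        key_events[channel] += n_key_events
    return {ch: key_events[ch] / sessions[ch] for ch in sessions if sessions[ch] > 0}


if __name__ == "__main__":
    # Placeholder figures for illustration only.
    sample = [
        ("chat.openai.com", "referral", 60, 3),
        ("chatgpt.com", "referral", 40, 2),
        ("perplexity.ai", "referral", 25, 1),
        ("google", "organic", 4000, 80),
    ]
    for channel, rate in conversion_rates(sample).items():
        print(f"{channel:<15} {rate:.1%}")
```

The alternation inside `AI_SOURCES` is also the kind of pattern you might reuse in a GA4 "matches regex" condition; test it against your own session sources first, since matching behaviour can differ between tools.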

Example of what AI referrals look like in GA4

Running structured prompt tests

Referral data tells you that traffic is arriving. Prompt testing tells you why. By defining the 10-20 key questions you want to be associated with, then asking them in ChatGPT, Bing, Perplexity and Google AI Overviews, you create a benchmark of current visibility.

The results should be logged systematically - for instance:

- The appearance type (cited, mentioned, or absent)

- Competitors named alongside you

- The brand descriptor used and whether it matches your preferred version

- The change since last month, with links to screenshots or clips for evidence

This gives you both a baseline and a way to track campaign impact.
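The log fields above map naturally onto one record per prompt, platform and month. The sketch below is a hypothetical layout (the `PromptTestResult` field names are assumptions, not a required schema) that validates the appearance type, writes rows to CSV for a spreadsheet, and derives the change since last month by comparing the same prompt and platform across two months.

```python
import csv
import io
from dataclasses import asdict, dataclass, field, fields
from typing import Optional

APPEARANCE_TYPES = ("cited", "mentioned", "absent")


@dataclass
class PromptTestResult:
    """One prompt, on one platform, in one month (hypothetical field names)."""

    month: str  # e.g. "2025-02"
    prompt: str
    platform: str
    appearance: str  # one of APPEARANCE_TYPES
    competitors: list[str] = field(default_factory=list)
    descriptor: str = ""  # how the AI described the brand
    matches_preferred: bool = False  # does the descriptor match your preferred version?
    evidence_link: str = ""  # link to a screenshot or clip

    def __post_init__(self) -> None:
        if self.appearance not in APPEARANCE_TYPES:
            raise ValueError(f"appearance must be one of {APPEARANCE_TYPES}, got {self.appearance!r}")


def change_since_last_month(current: PromptTestResult, previous: Optional[PromptTestResult]) -> str:
    """Describe how the appearance type moved for the same prompt and platform."""
    if previous is None:
        return "new"
    if previous.appearance == current.appearance:
        return "no change"
    return f"{previous.appearance} -> {current.appearance}"


def write_log(results: list[PromptTestResult], out) -> None:
    """Write results as CSV rows (e.g. for import into a spreadsheet)."""
    writer = csv.DictWriter(out, fieldnames=[f.name for f in fields(PromptTestResult)])
    writer.writeheader()
    for result in results:
        row = asdict(result)
        row["competitors"] = "; ".join(result.competitors)  # flatten the list into one cell
        writer.writerow(row)


if __name__ == "__main__":
    jan = PromptTestResult("2025-01", "best payroll software for small businesses", "Perplexity", "mentioned")
    feb = PromptTestResult(
        "2025-02", "best payroll software for small businesses", "Perplexity", "cited",
        competitors=["Competitor A"], descriptor="affordable payroll provider",
    )
    print(change_since_last_month(feb, jan))  # mentioned -> cited
    buffer = io.StringIO()
    write_log([jan, feb], buffer)
    print(buffer.getvalue())
```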

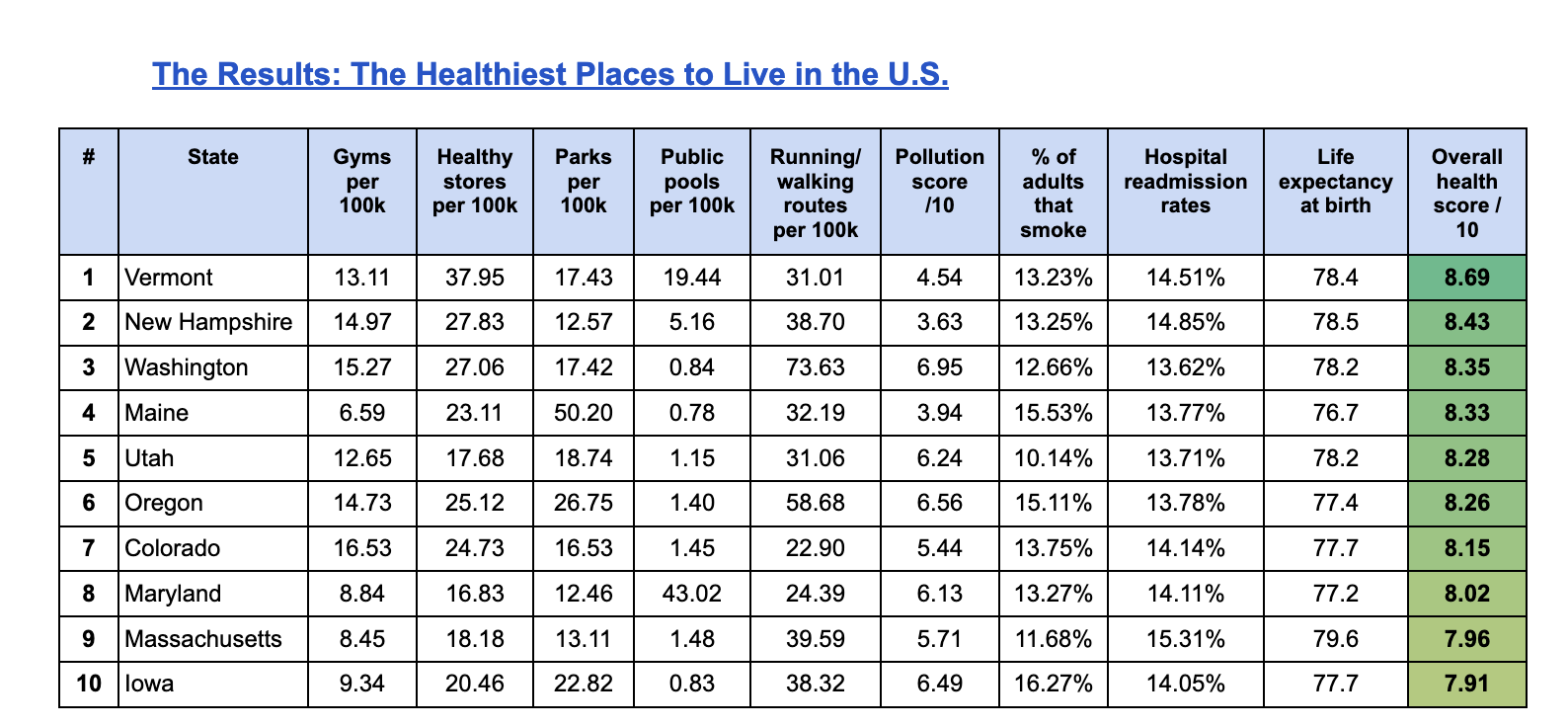

Measuring and proving ROI

Traffic and mentions matter less if they don’t deliver business results. The most persuasive evidence is conversions attributed to AI referrals. In GA4, segment key events by referral source to isolate quote requests, contact forms, and online sales from tools such as ChatGPT, Copilot and Perplexity.
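If you export key events with their session source, isolating AI-driven outcomes comes down to a filter and a count. In this sketch, `quote_request` and `contact_form_submit` are hypothetical event names (`purchase` is GA4's recommended ecommerce event), the source-to-platform map is a simplified assumption, and bing.com is included even though it can also carry standard Bing search referrals.

```python
from collections import Counter

# Hypothetical key event names, except "purchase" (GA4's recommended ecommerce event).
KEY_EVENTS = {"quote_request", "contact_form_submit", "purchase"}

# Simplified source-to-platform map (an assumption; extend it for your own data).
# bing.com can also carry standard Bing search referrals.
AI_REFERRERS = {
    "chat.openai.com": "ChatGPT",
    "chatgpt.com": "ChatGPT",
    "bing.com": "Copilot",
    "perplexity.ai": "Perplexity",
}


def ai_key_events(rows) -> Counter:
    """Total key events per (platform, event) from AI referral sources.

    `rows` is an iterable of (session_source, event_name, event_count),
    e.g. exported from a GA4 exploration.
    """
    totals: Counter = Counter()
    for source, event_name, count in rows:
        platform = AI_REFERRERS.get(source.lower())
        if platform and event_name in KEY_EVENTS:
            totals[(platform, event_name)] += count
    return totals


if __name__ == "__main__":
    sample = [
        ("chatgpt.com", "quote_request", 4),
        ("chat.openai.com", "quote_request", 1),
        ("perplexity.ai", "purchase", 2),
        ("google", "purchase", 40),          # organic, not counted here
        ("perplexity.ai", "page_view", 90),  # not a key event
    ]
    for (platform, event_name), count in sorted(ai_key_events(sample).items()):
        print(f"{platform:<10} {event_name:<15} {count}")
```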

In our GEO case study, conversions from AI referrals increased by more than 200% after we implemented consistent “context wrapping”. This linked GEO directly to revenue, making the value clear for stakeholders.

Context wrapping not only improves visibility, but it also increases measurable outcomes, from qualified leads to completed purchases.

Measuring GEO performance can be complex, especially when AI visibility relies on multiple teams and tools. If you’re considering external support to help structure reporting or integrate AI metrics, our guide on how to choose a GEO agency explains what to look for in an agency partner - from technical auditing to attribution frameworks that prove ROI.

A GEO audit can benchmark your AI visibility before you begin tracking changes, helping you understand which citations and coverage drive results, and which need improvement.

The AI visibility tracker

Tracking AI visibility is still an emerging process, and every brand will define it slightly differently. The key is to track consistently and benchmark your position over time.

Use a single dashboard or spreadsheet to log activity across platforms, as in the example below:

| Metric | How to measure | Frequency | Goal |
|---|---|---|---|
| AI citations | Manual testing in ChatGPT, Bing Copilot, and Perplexity | Monthly | Track inclusion rate |
| Referral traffic | GA4 + server logs | Monthly | Identify new AI-driven sessions |
| Coverage mentions | Ahrefs / Google Alerts | Monthly | Maintain consistent phrasing |
| Schema health | Rich Results Test | Quarterly | Ensure structured data validity |
| Data updates | Manual review | Quarterly | Keep facts current |
| Prompt testing | Custom queries | Monthly | Monitor appearance trends |
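The "inclusion rate" goal for AI citations can be computed straight from the prompt log. This sketch assumes each logged result is a (platform, appearance) pair using the cited / mentioned / absent labels, and lets you choose whether mentions count towards inclusion; that choice is an assumption to agree with stakeholders, not a standard definition. `inclusion_rate` is a hypothetical helper.

```python
from collections import defaultdict


def inclusion_rate(results, count_mentions: bool = True) -> dict[str, float]:
    """Share of tested prompts per platform in which the brand was included.

    `results` is an iterable of (platform, appearance) pairs, where appearance
    is 'cited', 'mentioned' or 'absent'. With count_mentions=False, only
    linked citations count as inclusion.
    """
    included_types = {"cited", "mentioned"} if count_mentions else {"cited"}
    totals: dict[str, int] = defaultdict(int)
    included: dict[str, int] = defaultdict(int)
    for platform, appearance in results:
        totals[platform] += 1
        if appearance in included_types:
            included[platform] += 1
    return {platform: included[platform] / totals[platform] for platform in totals}


if __name__ == "__main__":
    log = [
        ("Perplexity", "cited"), ("Perplexity", "absent"),
        ("ChatGPT", "mentioned"), ("ChatGPT", "mentioned"),
        ("ChatGPT", "absent"), ("ChatGPT", "cited"),
    ]
    print(inclusion_rate(log))                        # {'Perplexity': 0.5, 'ChatGPT': 0.75}
    print(inclusion_rate(log, count_mentions=False))  # {'Perplexity': 0.5, 'ChatGPT': 0.25}
```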

To make this easier, we've created an AI visibility tracker - a ready-to-use Google Sheets template to record prompts, citations, and LLM referrals, so you can measure exactly where your brand appears in AI search.

Download your free AI visibility tracker.

Why measurement matters

Generative search has compressed visibility. A single AI answer might cite three brands out of hundreds competing for the same topic. If you’re not tracking how often you’re mentioned or where those mentions occur, you’re missing critical insight.

We treat GEO measurement as an evidence loop: by monitoring AI visibility month by month, brands can identify which tactics - such as technical, on-site, or off-site (AiPR) - actually move the needle.

How to build your GEO reporting framework

Define baseline metrics

Record your current visibility across AI tools before optimising.

Map activity to outcomes

Connect technical changes or PR launches to visibility spikes.

Review monthly

AI algorithms evolve fast - and measurement should too.

Set quarterly GEO goals

For example: “Appear in AI answers for three target queries within three months”.

Report to stakeholders

Translate technical insights into clear commercial outcomes - such as visibility, leads, and reputation growth.

Brands that monitor citations, referrals and context regularly can demonstrate real commercial impact from GEO.

A practical framework to start

This month

- Create GA4 referral filters for ChatGPT, Bing Copilot, Perplexity and Gemini.

- Copy the prompt-testing sheet and add ten core prompts per service.

- Run your first tests, capture screenshots and set a recurring monthly reminder.

Next month

- Introduce an automated logger (or your chosen tool) to record citations.

- Build a simple Looker view showing AI sessions, key events and conversion rate.

- Review GSC for long natural-language queries and align planned content updates.

Quarterly

- Refresh statistics on your top pages, re-validate schema, and re-run all prompts.

- Correlate AiPR launches with changes in citations and referral conversions.

- Present a one-page GEO visibility scorecard to stakeholders with the month-on-month trend.
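For the one-page scorecard, the month-on-month trend for each metric is the percentage change between the last two months: (current - previous) / previous × 100. The sketch below assumes metrics are stored as chronological lists of monthly values; `pct_change`, `scorecard`, the metric names and the figures are placeholders, and a zero previous value is reported as n/a rather than as an infinite change.

```python
from typing import Optional


def pct_change(previous: float, current: float) -> Optional[float]:
    """Percentage change from `previous` to `current`; None if `previous` is zero."""
    if previous == 0:
        return None
    return (current - previous) / previous * 100


def scorecard(monthly: dict[str, list[float]]) -> list[str]:
    """One line per metric: latest value plus change against the prior month.

    `monthly` maps a metric name to its values in chronological order.
    """
    lines = []
    for metric, values in monthly.items():
        if len(values) < 2:
            latest = f"{values[-1]:g}" if values else "n/a"
            lines.append(f"{metric}: {latest} (no trend yet)")
            continue
        previous, current = values[-2], values[-1]
        change = pct_change(previous, current)
        trend = "n/a" if change is None else f"{change:+.0f}%"
        lines.append(f"{metric}: {current:g} ({trend} vs last month)")
    return lines


if __name__ == "__main__":
    # Placeholder figures for illustration only.
    example = {
        "AI referral sessions": [40, 50],
        "Prompts with a citation": [6, 6],
        "Key events from AI referrals": [0, 3],
    }
    for line in scorecard(example):
        print(line)
```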

Download our LLM optimisation checklist to audit your on-site, off-site, and technical setup and benchmark your readiness before your next GEO review.

Next up

Read our GEO glossary to get clued up on terms such as AiPR, fan-outs, citations, context wrapping, and more. Or head back to the previous page showing GEO examples in action.

Back to the GEO playbook homepage